SmartQA is beautiful combination of thinking styles, a mindset of brilliance and minimalism,

Continue reading8 things I do to solve problems creatively

Over the years there are a few things that I do consistently to solve problems technical or business.

Continue readingManagement expectations of CIO & IT team

Whether the end-user organization is small or large, the challenges remain the same for both of them.

Continue readingDFT & Automation

In this smartbits video “Design for Testability& Automation” Girish Elchuri outlines how design for testability aids in test automation.

Continue reading25 things I expect of a great tester

Here are 25 things I expect of a great tester like (1) Be disciplined, but stay creative.(2) Ask questions, find answers…

Continue reading3 failures of poor system operationalisation

Summary

Successful large system deployment failures is not merely due to poor testing of software. It is about poor operationalisation of software. This article outlines three major failures – Poor transition of software to end users, messed up business procedures and Data issues, the result of poor operationalisation. These have been curated from two articles.

FAIL #1 Avon : Poor transition of software to end users

In 2013 Avon’s $125 million SAP enterprise resource planning project failed after four years of work, development and employee testing.

ERP software can brag all it wants about functionality and all of the magical modules and apps you can use to make your business processes easier, but that won’t mean anything if your software isn’t actually usable. It’s all about aligning your software to your business processes, and if you can’t get staff to use your ERP, they won’t be carrying out the processes necessary to keep your business running. Make sure your employees are properly trained and transitioned into the new software, and that they want to use that system in the first place.

Read about this in detail at https://blog.datixinc.com/blog/erp-failure-stories

FAIL #2 Woolworth : Business procedures messed up

The Australian outpost of the venerable department store chain, affectionately known as “Woolies,” also ran into data-related problems as it transitioned from a system built 30 years ago in-house to SAP.

The day-to-day business procedures weren’t properly documented, and as senior staff left the company over the too-long six-year transition process, all that institutional knowledge was lost — and wasn’t able to be baked into the new rollout.

Read about this in detail at https://www.cio.com/article/2429865/enterprise-resource-planning-10-famous-erp-disasters-dustups-and-disappointments.html

FAIL #3 Target Canada : Data issues

Many companies rolling out ERP systems hit snags when it comes to importing data from legacy systems into their shiny new infrastructure. The company’s supply chain collapsed, and investigators quickly tracked the fault down to this supposedly fresh data, which was riddled with errors -items were tagged with incorrect dimensions, prices, manufacturers, you name it.

Thousands of entries were put into the system by hand by entry-level employees with no experience to help them recognise when they had been given incorrect information from manufacturers, working on crushingly tight deadlines. It was later found that only about 30 percent of the data in the system was actually correct.

Read about this in detail at https://www.cio.com/article/2429865/enterprise-resource-planning-10-famous-erp-disasters-dustups-and-disappointments.html

About SmartQA The theme of SmartQA is to explore various dimensions of smartness to leapfrog into the new age of software development, to accomplish more with less by exploiting our intellect along with technology. Towards this, we will strive to showcase interesting thoughts, expert industry views through high-quality content as articles, posters, videos, surveys outlined as a SmartQA Digest weekly emailer. SmartBites is “soundbites from smart people”. Ideas, thoughts and views to inspire you to think differently.

Signup to receive SmartQA digest that has something interesting weekly to becoming smarter in QA and delivering great products.

7 Thinking Tools to Test Rapidly

by T Ashok

Summary

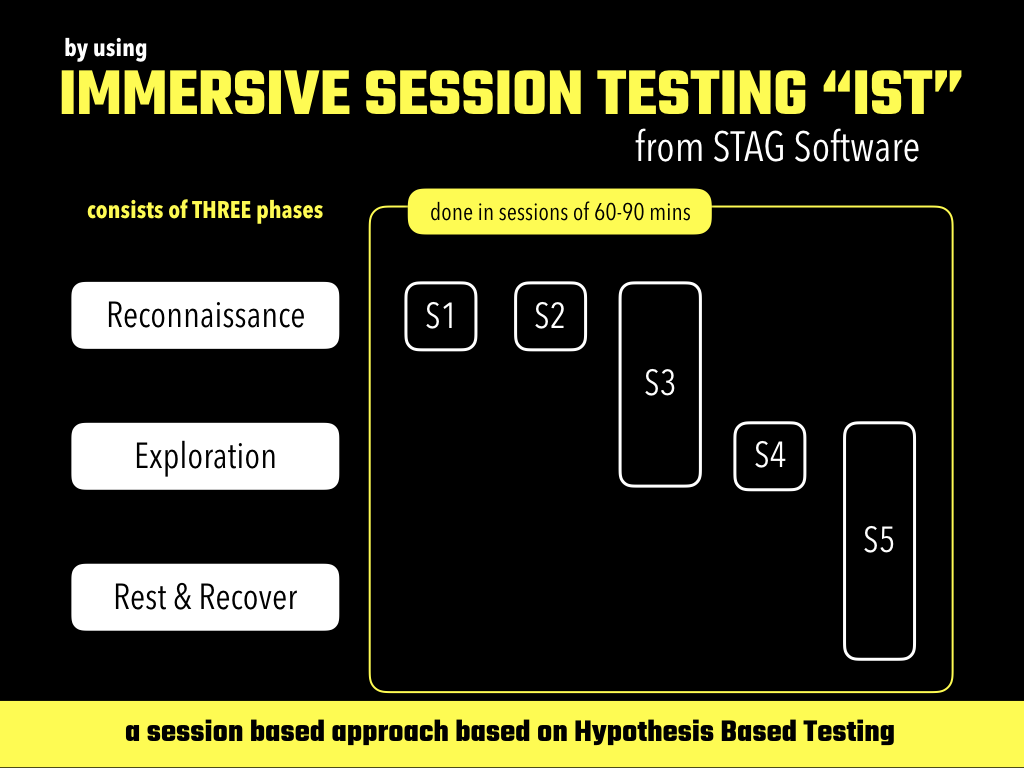

The act of testing is a scientific exploration of a system done in three phases – RECONNAISSANCE to understand and plan, SEARCH to look for issues, REST&RECOVER to analyse and course correct. To enable the various activities in each phase to be done quickly and effectively, is where the SEVEN Thinking Tools outlined in this article help. How to apply these tools in a session-based approach is also briefly outlined.

When I hear people talking about testing as Manual or Automated with the latter being the need of the hour, I am flabbergasted. All the word ‘manual’ conjures in my brain is that of me doing a menial job of painful scrubbing!

It is time we used Intellectual & Tool-supported. “Think well. Exploit tools to do.”Enough of rant!

In current times, speed is everything, right? What can we do to test quickly ? Use tools. Automate. Right? Wait a minute – This is about execution, right? What about prior activities?

To answer, let us ask the basic question what is testing after all? Testing is exploration. Let me correct it. Testing is scientific exploration. And exploration is a human activity that is aided by tools & technology. How can we do scientific exploration rapidly? By using tools that help us think better and do faster.

Let us say you want to explore the nearby mountain range by foot. Would you just pick up your backpack and go on? I bet not, unless it is a really short trip. Otherwise I think you will study the geography/terrain, read others’ experiences, do a reconnaissance, create various maps of terrain, of pit stops, of food joints etc before you chalk out the full route. Once the route is setup, you will pack your bags and go. As you explore, you will discover that “the map is not the terrain” and be taunted, surprised, challenged and you will learn, adjust, improvise, revise the maps, routes as needed. Tired, you will rest, analyse, replan & recover to continue on your journey. This is not ad-hoc nor driven by sheer bravado. This requires logical thinking(scientific), planning and ability to observe, adjust continuously and also some bravado and good luck!

This is what we can apply too in testing our software/systems. This article distils this and provides you with SEVEN THINKING TOOLS to enable you do these easily and scientifically.

Applying the above analogy, we look act of testing as being done in THREE phases: RECONNAISANCE, EXPLORATION, REST&RECOVER.

RECONNAISANCE : Do survey and create maps

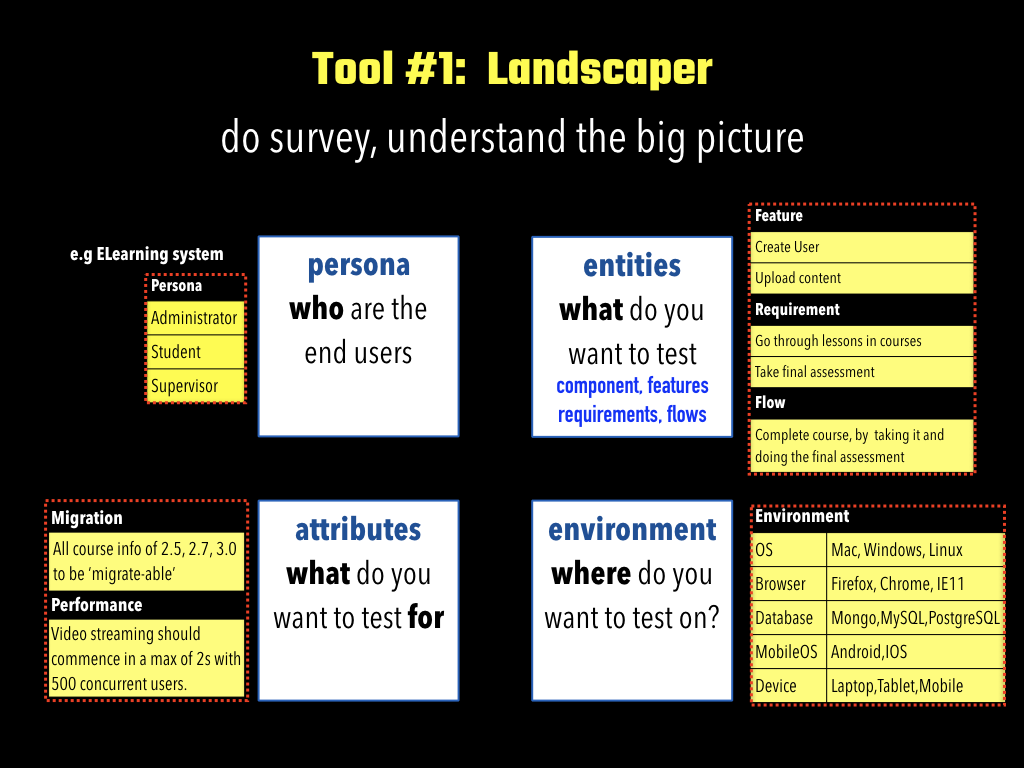

Survey : Get to understand the system under test by reading documents, playing with the software/system, discussing with people, to clearly understand who the end users are, what the entities (e.g. features, requirements..) to test are, what the various attributes the end users expect are, and the environment in which it will be deployed. In a nutshell we want to know the Who, What-to-test, What-to-test-for and Where. This is done by the Tool #1 “Landscaper”.

Create Maps: Now that you know the key information, connect them to four useful maps: Persona map, Scope map, Interaction map and Environment map.

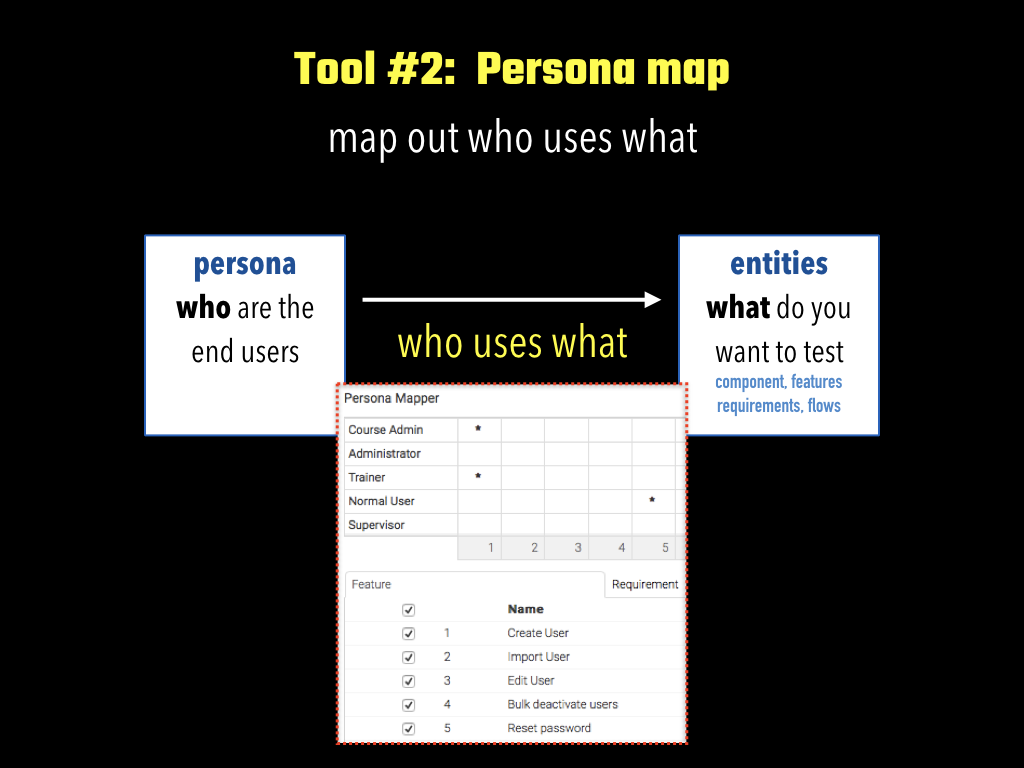

(Tool #2) Persona map : A list that clearly connects the “Who” to What”. This helps us understand who uses what and therefore helps us prioritise testing and certainly enables us to get user centric view to validation.

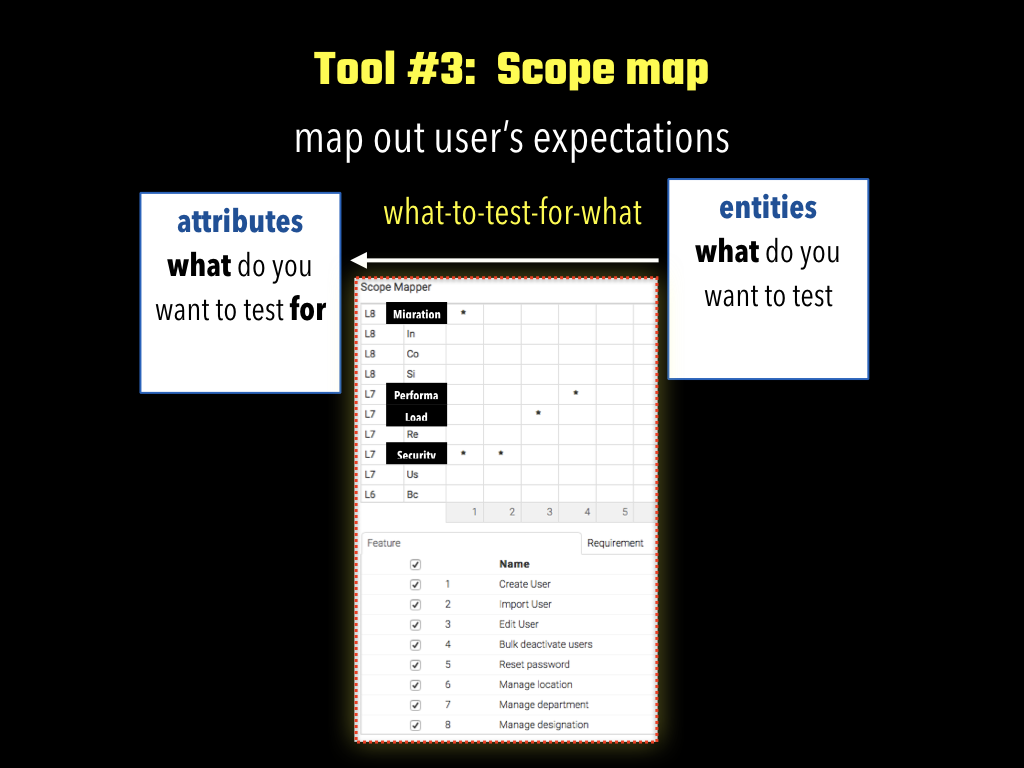

(Tool #3 )Scope map: A list that connects the ‘What’ to ‘What-for’. This helps us to understand what the expectations of the various entities are i.e. That for even feature F1, we have an expectation of performance. What does this help us do? This helps us identify the various types of tests to be done.

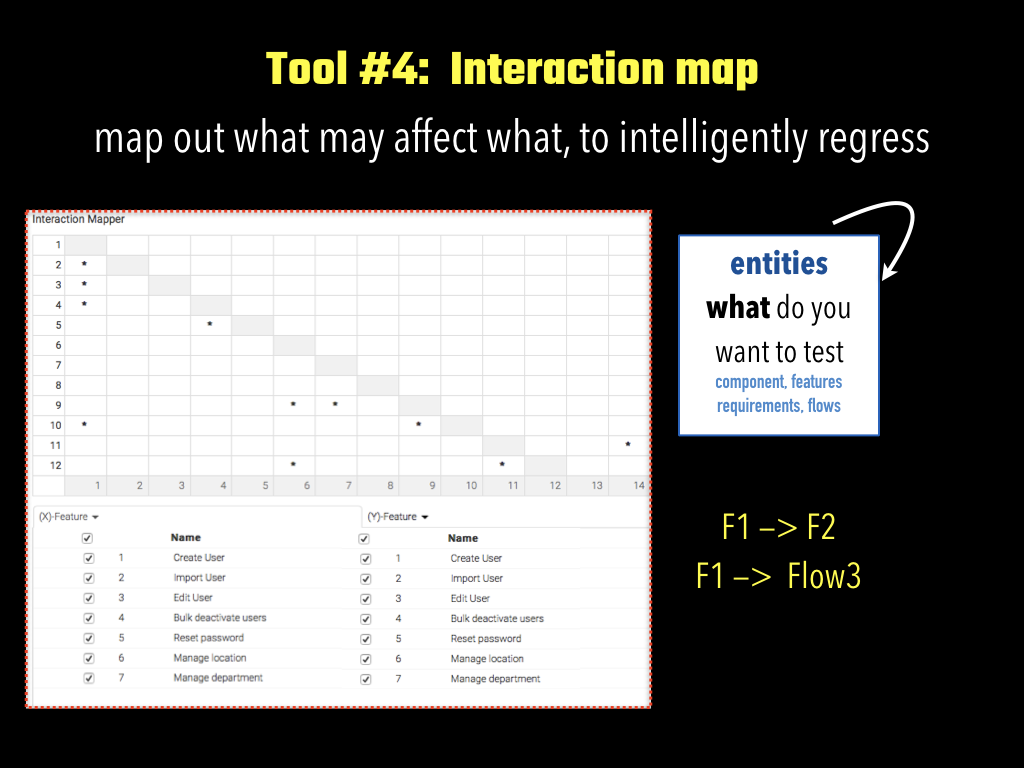

(Tool #4) Interaction map: No entity is an island i.e. each entity may affect one or more entities. i.e a feature F1 may affect another feature F2 and therefore a modification of F1 may require retesting of F2. How does his map help us? Well, this helps plan our regression strategy intelligently.

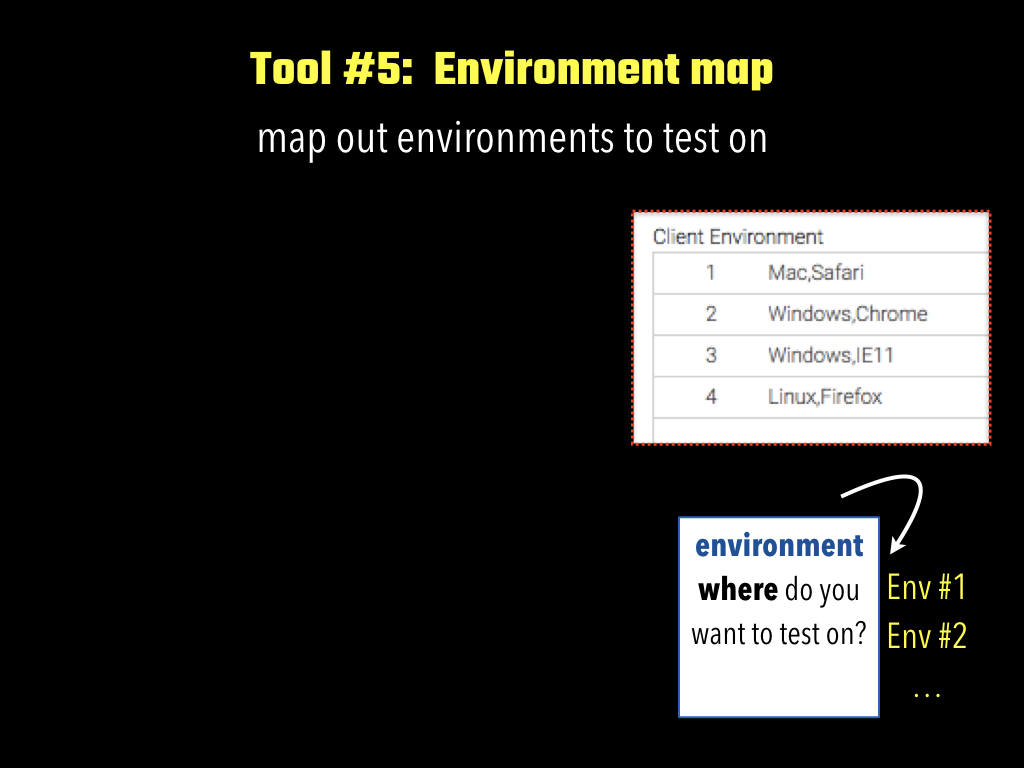

(Tool #5) Environment map : This lists out the various environments on which the final system may be run so that the functionality and attributes may be evaluated on various deployment environments.

Now that we have done done the reconnaissance, we should have good idea of the system under test and therefore be ready to explore.

SEARCH : Now that we have the maps, the next step would be chalk out the routes and then we are ready to commence our search for issues. This is done using the “Scenario creator” tool. Once this is done we commence our search for issues. When doing this we will encounter things we don’t know, things that we did not anticipate, issues and therefore will need to revise and course correct revise the landscape, maps and routes. This is accomplished via the Dashboard tool in the Rest&Recover phase.

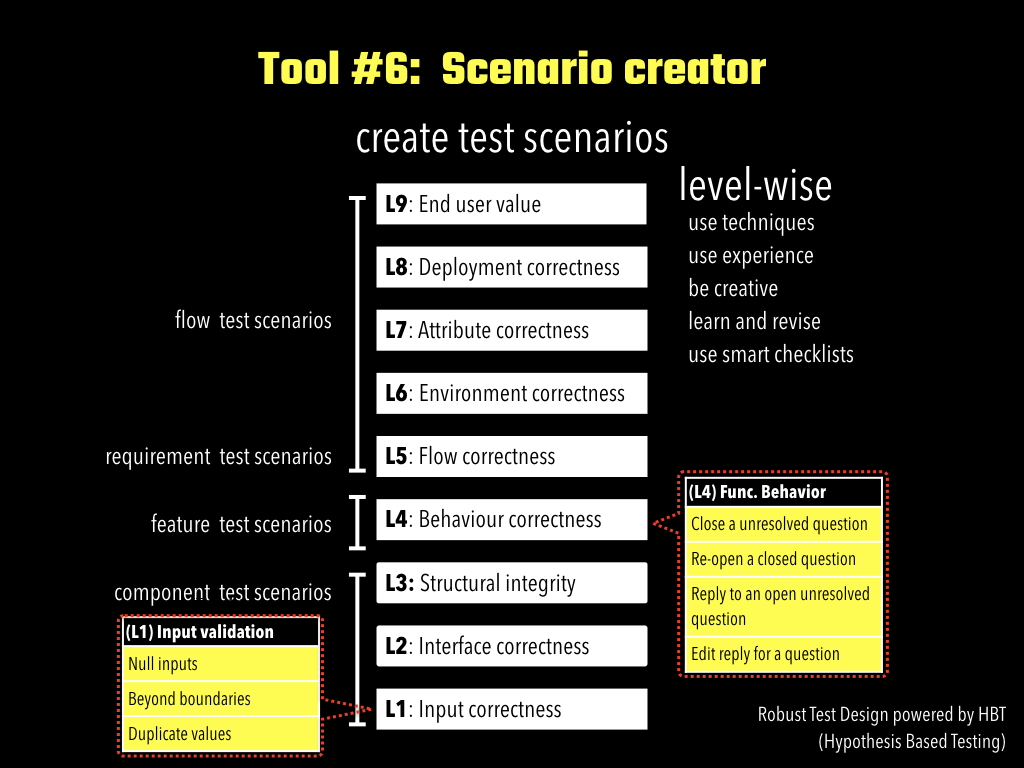

(Tool #6) Scenario creator: This tool helps to design the various test scenarios that would serve as the starting point. Note that these will be continuously revised as we explore and gain a deeper understand of the system and its context and usage. What is important would be segregate the scenarios into Levels so that the test scenarios are focused and clear in their objective. Robust Test Design approach of HBT helps you design scenarios that may be done using a mix of formal techniques, past experience, domain knowledge, context but clearly segregated into various HBT Quality Levels.

REST& RECOVER: In this phase, the objective is to analyse the exploration phase results and improve what we can do, track progress of doing and judge of the quality of the system-under-test. This is done by the tool ‘Dashboard’

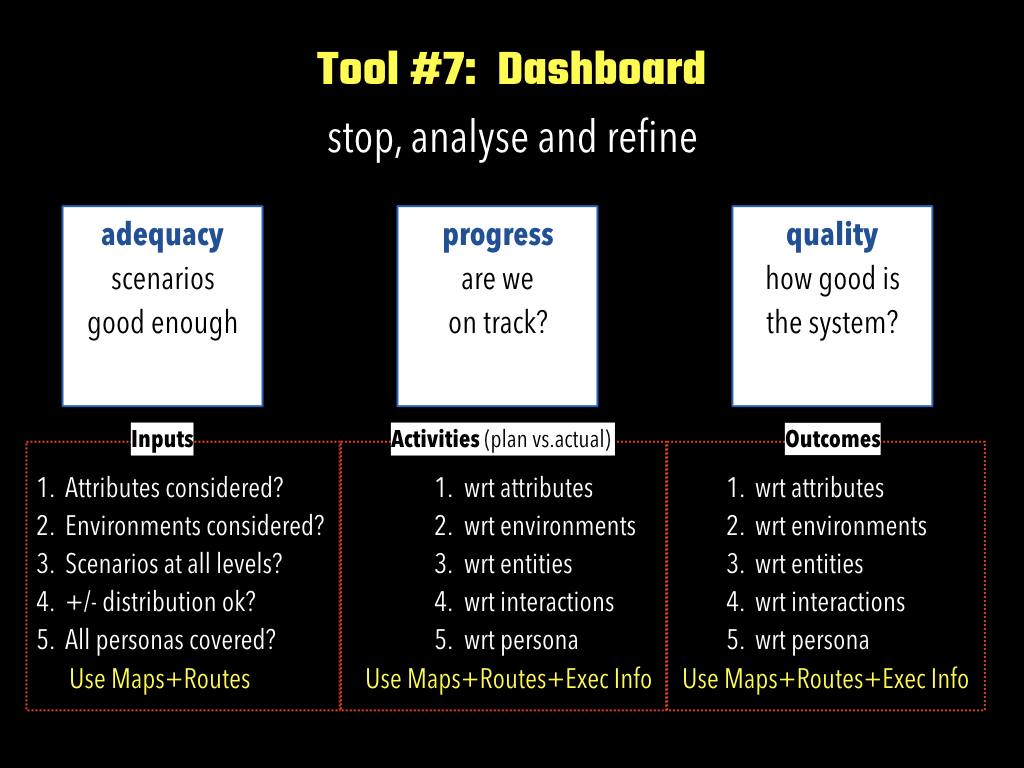

(Tool #7) Dashboard: This tool helps you to do there things : (a) judge adequacy by look at the map and route information and improve the same (b) track progress of work done by checking the what has been done vs.planned as far as routes are concerned (c) judge quality by looking at the execution outputs of the scenarios level wise.

So how do we apply this tools?

We saw that these 7 tools could be used in the THREE phases of RECONNAISSANCE, SEARCH, REST&RECOVER by a session based approach “Immersive Session Testing”. Each session is suggested to be short and focused say 60-90 minutes with a session objective to one or a mix of the phases.

Note that a session could be an exclusive RECONNAISSANCE or SEARCH or REST&RECOVER on a combination of these. Why is the session time suggested to be 60-90 minutes? Well this is to ensure razor sharp focus on each on the activity done. Also a short focussed sessions allow one to get into a state of flow enabling higher productivity and enjoy the activity!

Click here to play the webinar video.

Click here to see the slides in SlideShare

About SmartQA The theme of SmartQA is to explore various dimensions of smartness to leapfrog into the new age of software development, to accomplish more with less by exploiting our intellect along with technology. Towards this, we will strive to showcase interesting thoughts, expert industry views through high quality content as articles, posters, videos, surveys outlined as a SmartQA Digest weekly emailer. SmartBites is soundbites from smart people”. Ideas, thoughts and views to inspire you to think differently.

Signup to receive SmartQA digest that has something interesting weekly to becoming smarter in QA and delivering great products.